Information

Publication

2021.02.18

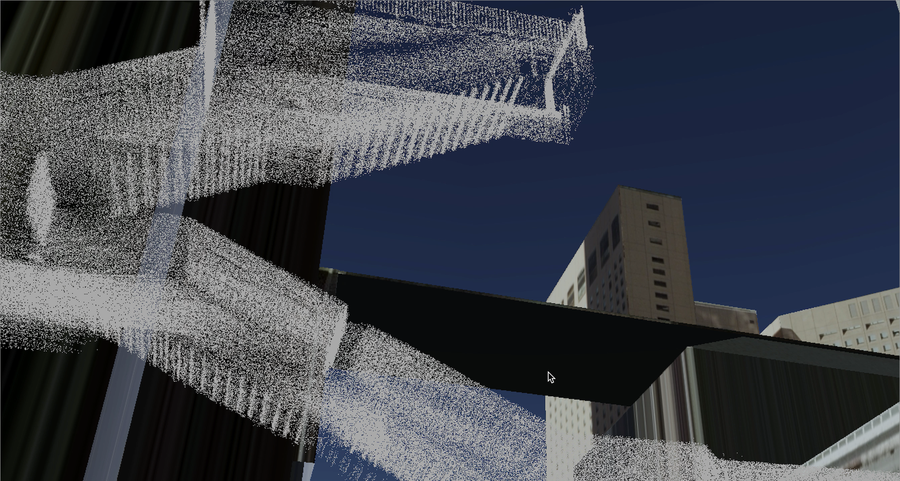

Toru Kouyama, Eri Tatsumi, Yasuhiro Yokota, Koki Yumoto, Manabu Yamada, Rie Honda, Shingo Kameda, Hidehiko Suzuki, Naoya Sakatani, Masahiko Hayakawa, Tomokatsu Morota, Moe Matsuoka, Yuichiro Cho, Chikatoshi Honda, Hirotaka Sawada, Kazuo Yoshioka and Seiji Sugita

<Abstract> Accurate measurements of the surface brightness and its spectrophotometric properties are essential for obtaining reliable observations of the physical and material properties of planetary bodies. To measure the surface brightness of Ryugu accurately, we calibrated the optical navigation cameras (ONCs) of Hayabusa2 using both standard stars and Ryugu itself during the rendezvous phase including two touchdown operations for sampling. These calibration results showed that the nadir-viewing telescopic camera (ONC-T) and nadir-viewing wide-angle camera (ONC-W1) experienced substantial variation in sensitivity. In particular, ONC-W1 showed significant sensitivity degradation (~60%) after the first touchdown operation. We estimated the degradations to be caused by front lens contamination by fine-grain materials lifted from the Ryugu surface due to thruster gas for ascent back maneuver and sampler projectile impact upon touchdown. While ONC-T is located very close to W1 on the spacecraft, its degradation in sensitivity was only ~15% over the entire rendezvous phase. If in fact dust is really the main cause for the degradation, this lighter damage likely resulted from dust protection by the long hood attached to ONC-T. However, because large variations in the absolute sensitivity occurred after the touchdown events, which should be due to dust effect, uncertainty for the absolute sensitivity was rather large (3-4%). On the other hand, the change in relative spectral responsivity (i.e., 0.55-μm-band normalized responsivity) of ONC-T was small (1%). The variation in relative responsivity during the proximity phase has been well calibrated to have only a small uncertainty (< 1%).
Furthermore, the degradation (i.e., increase) in the full width at half maximum of the point spread function of ONC-T and W1 was almost negligible, although the blurring effect due to dust scattering was confirmed in W1. These optical degradations due to the touchdown events were carefully monitored as a function of time along with other time-related deteriorations, such as the dark current level and hot pixels. We also conducted a new calibration of the flat-field change as a function of the detector temperature by observing the onboard flat-field lamp and validating with Ryugu's disk images. The results of these calibrations showed that ONC-T and W1 maintained their scientific performance by updating the calibration parameters.

2020.11.04

Transfer Learning With CNNs for Segmentation of PALSAR-2 Power Decomposition Components

<Abstract>

2020.04.01

Subir Paul, Vinayaraj Poliyapram, Nevrez İmamoğlu, Kuniaki Uto, Ryosuke Nakamura, D. Nagesh Kumar

<Abstract>

Researcher Profile

Toru Kouyama

Remote sensing, Planetary Meteorology

t.kouyama[at]aist.go.jp

Hirokazu Yamamoto

hirokazu.yamamoto[at]aist.go.jp

Yuri Nishikawa

nishikawa.yuri[at]aist.go.jp

https://yurinishikawa.github.io/index.html

Ali Caglayan

Computer Vision, Artificial Intelligence, Deep Learning, Robotics

ali.caglayan[at]aist.go.jp

Atsushi Oda

x-oda[at]aist.go.jp

Ryosuke Nakamura

Planetary Science, Satellite remote sensing

r.nakamura[at]aist.go.jp

Chiaki Tsutsumi

Geoinformation service

tsutsumi.chiaki[at]aist.go.jp

Soushi Kato

Remote Sensing

kato.soushi[at]aist.go.jp

Yosuke Ikeda

yosuke.ikeda[at]aist.go.jp

Ryo Ito

itou.ryo[at]aist.go.jp

Yuya Arima

y-arima[at]aist.go.jp